Guidance & Governance

This section introduces a practical framework to guide AI usage during the pilot while laying the foundation for enterprise-wide governance. It recognizes that acceptable AI use depends on context, not just capability.

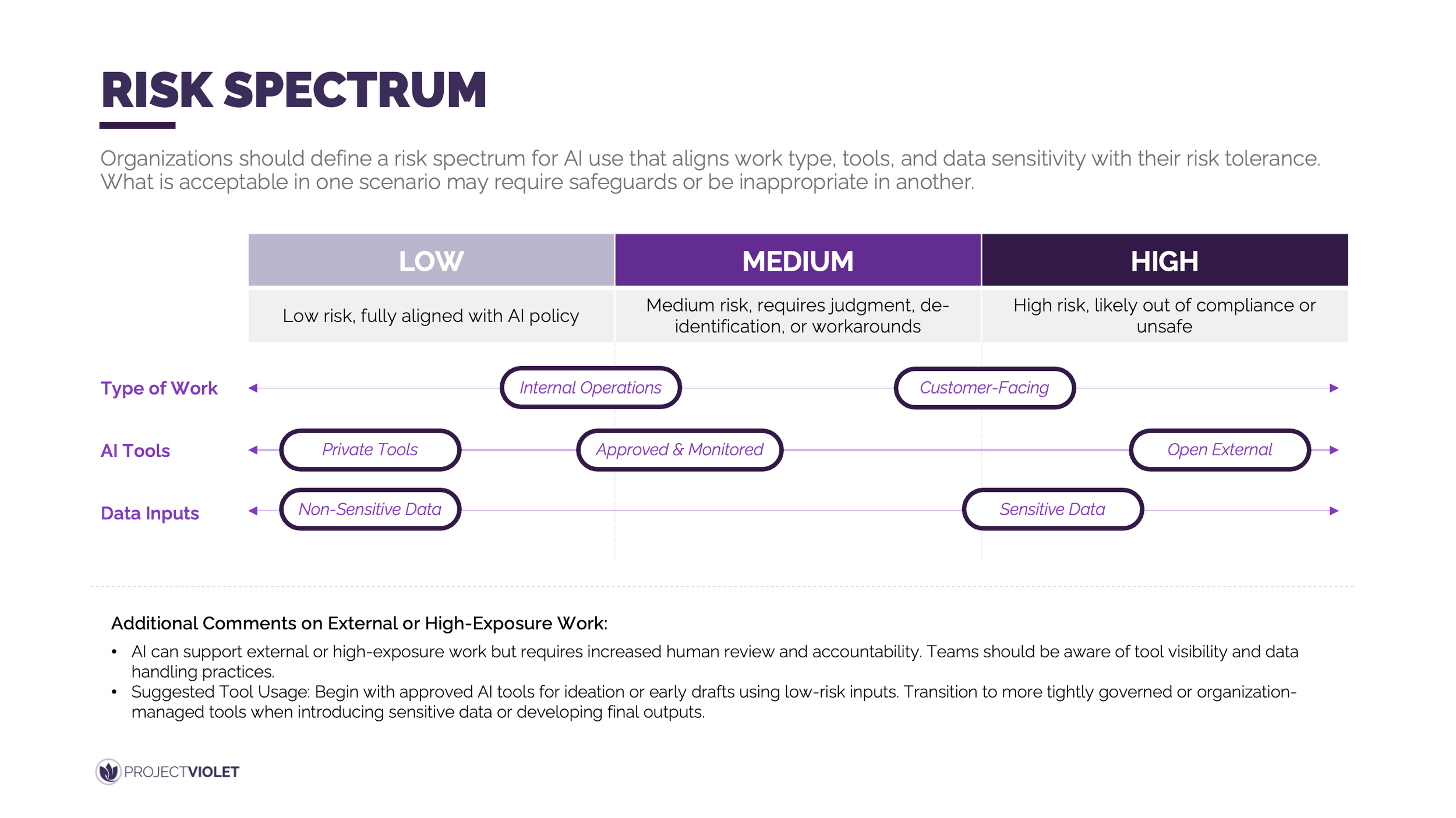

The risk spectrum provides a shared lens for evaluating AI use across the organization by aligning the type of work, the tools, and the data sensitivity with risk tolerance. Rather than treating AI as universally allowed or prohibited, this approach enables teams to make informed decisions based on exposure, impact, and accountability. For pilot participants, it offers clear guidance on where experimentation is encouraged, where judgment is required, and where safeguards or restrictions apply. For the broader organization, it establishes a common starting point that can be refined into formal policies, tool access rules, and review requirements over time.

In practice, teams begin by assessing the nature of the work their function performs, the AI tools they are authorized to use, and the sensitivity of the data involved. This assessment helps distinguish low-risk internal experimentation from higher-risk use cases, such as customer-facing or sensitive work, that demand tighter controls and human review. As the pilot progresses, these patterns and decisions inform scalable governance rules that can be consistently applied across functions in the next phase of rollout.

Decision-Making Framework for AI Use

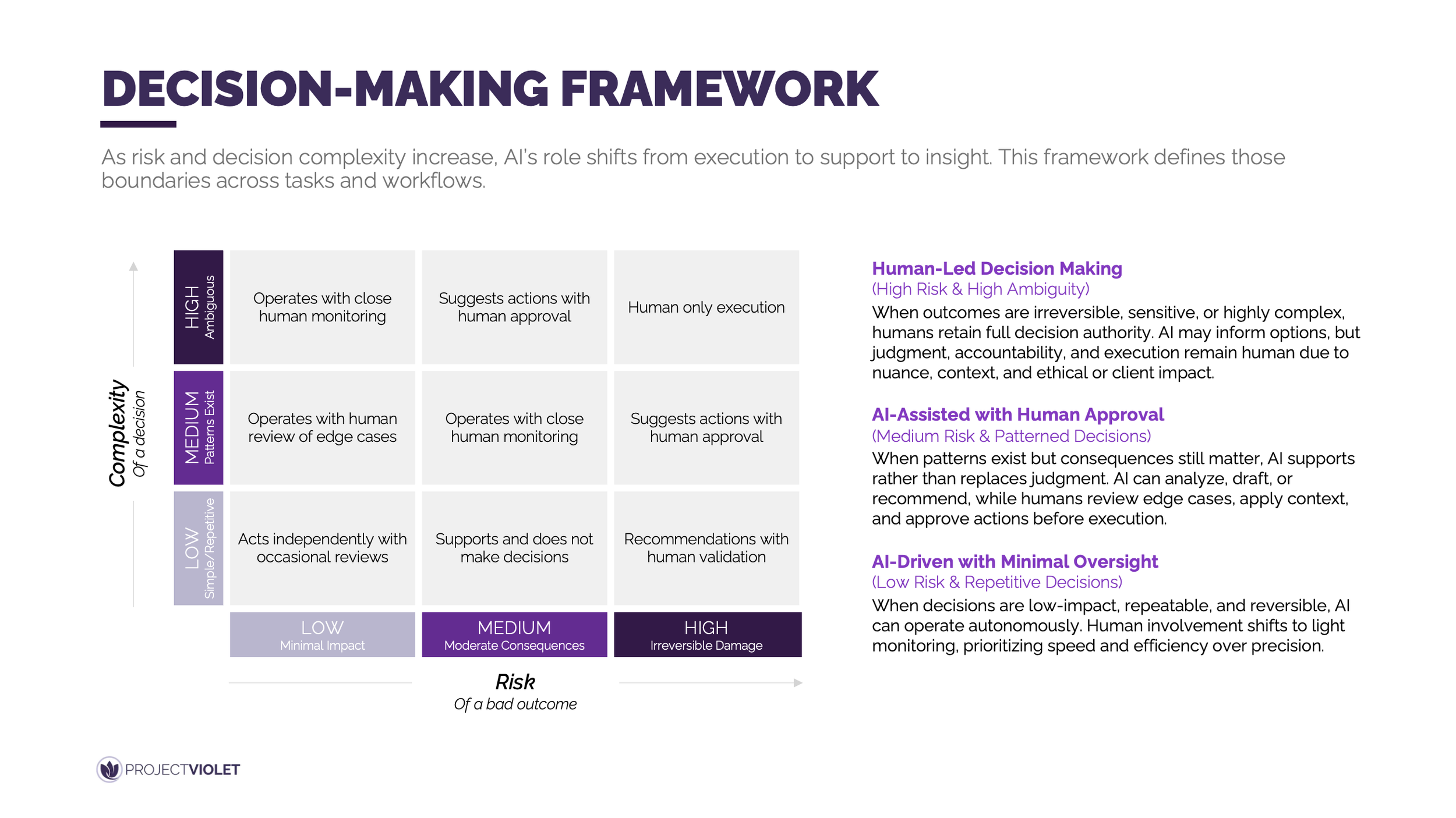

This framework provides shared guidance for how AI should be used across different types of work based on risk and decision complexity. As potential impact increases, AI’s role intentionally shifts from execution to support to insight.

Rather than leaving AI usage to individual discretion, this model helps teams make consistent, defensible decisions about when AI can act independently, when it should assist, and when work should remain human-led. The core principle is that AI involvement must scale down as ambiguity, sensitivity, and irreversibility increase. Low-risk, repetitive tasks can be automated or AI-driven to maximize speed and efficiency. As consequences grow, AI moves into an advisory role, supporting analysis, drafting, or pattern recognition while humans retain approval and accountability. In the highest-risk scenarios, AI may inform thinking, but execution and judgment remain fully human due to ethical, reputational, or client impact.
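For teams that want to encode this principle in a checklist or intake form, the scaling rule above can be sketched in a few lines of Python. This is an illustrative sketch only: the three-level scale, the rule that the highest-scoring factor sets the overall risk, and the names `Level`, `ROLE_BY_RISK`, and `ai_role` are all assumptions made for the example, not part of the framework itself.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical three-point scale for each risk factor."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Assumed mapping that mirrors the "execution, support, insight" progression.
ROLE_BY_RISK = {
    Level.LOW: "execute",     # AI can automate or drive the task
    Level.MEDIUM: "support",  # AI advises; humans approve and stay accountable
    Level.HIGH: "insight",    # AI may inform thinking; humans execute and judge
}


def ai_role(ambiguity: Level, sensitivity: Level, irreversibility: Level) -> str:
    """Return the suggested AI role for a task.

    Assumption: the single highest factor determines overall risk, so one
    HIGH rating is enough to keep the work human-led.
    """
    overall = max(ambiguity, sensitivity, irreversibility)
    return ROLE_BY_RISK[overall]


# A repetitive internal task: low on every factor.
print(ai_role(Level.LOW, Level.LOW, Level.LOW))

# A client deliverable that is hard to retract once sent.
print(ai_role(Level.MEDIUM, Level.MEDIUM, Level.HIGH))
```

Taking the maximum rather than an average is a deliberately conservative choice for this sketch: a task that is routine in most respects but irreversible still lands in the human-led tier. A team could reasonably choose a different rule once pilot data shows where the boundaries actually fall.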

In practice, this framework gives pilot participants a clear mental model for navigating real workflows without overcorrecting toward blanket restrictions. It reinforces that responsible AI use is not about avoiding AI, but about matching its role to the stakes of the outcome. These decision boundaries also serve as a foundation for scalable governance, informing future standards for review, audit, and acceptable use as AI adoption expands across the organization.

Sample AI Usage Across a Workflow

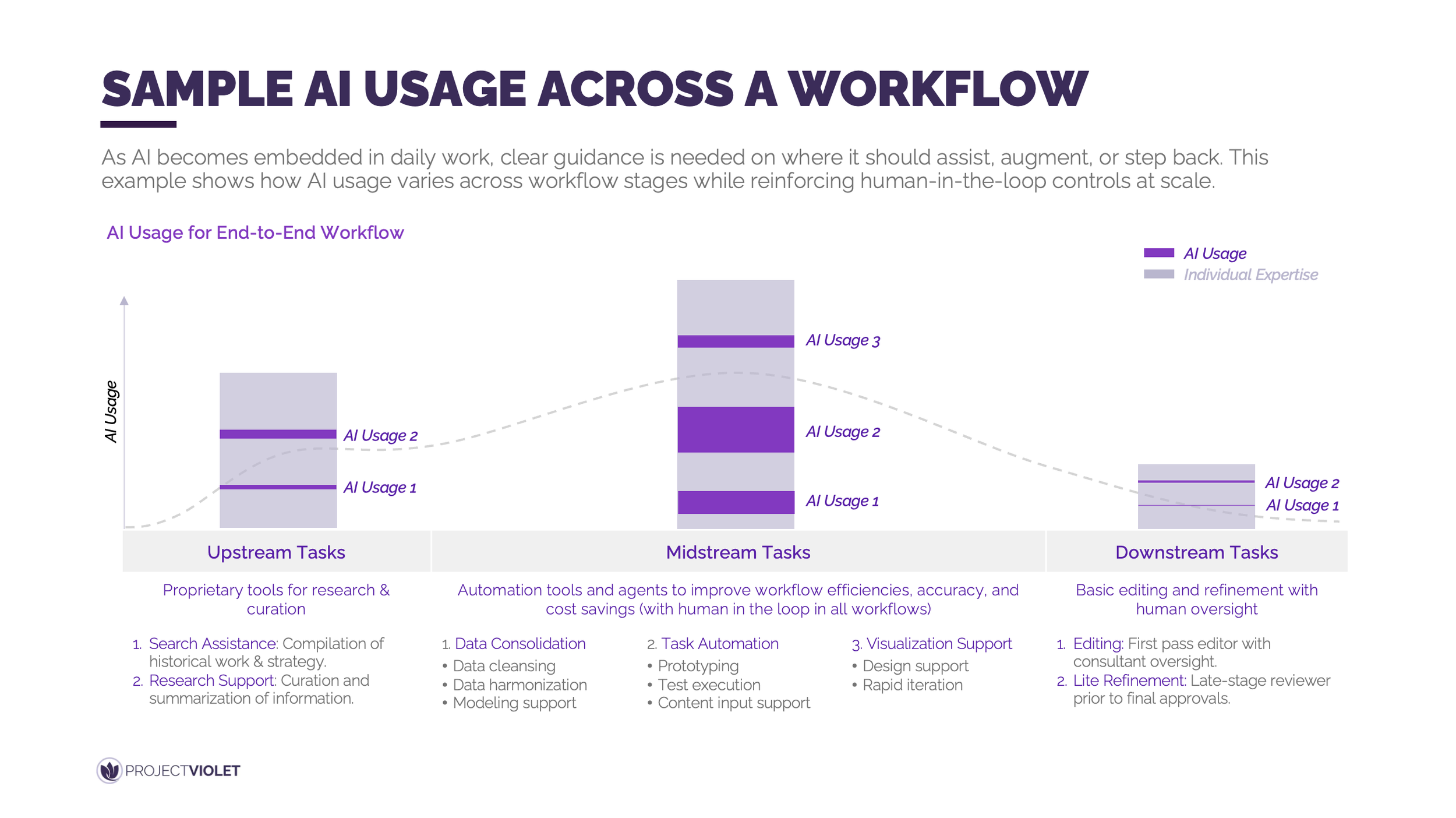

This example illustrates how AI usage can vary across an end-to-end workflow, rather than being applied uniformly. It reinforces that AI should assist, augment, or step back depending on the stage of work and the nature of the outcome.

The intent is to move from abstract guidance to concrete, role-specific examples that help teams apply judgment consistently. By mapping AI usage across upstream, midstream, and downstream tasks, organizations can clarify where AI adds leverage and where human expertise must remain dominant. In early stages, AI can support research, information curation, and pattern discovery with relatively low risk. In the middle of the workflow, AI often plays its most powerful role by supporting automation, analysis, and iterative development, while maintaining clear human-in-the-loop controls. As work approaches final outputs and approvals, AI use intentionally tapers, shifting toward light editing and refinement under direct human oversight.

In practice, these examples should be developed by functional area, reflecting the specific work, tools, and risks each team faces. Supervisors play a critical role in defining these patterns with their teams, ensuring expectations are clear and aligned with accountability. Over time, these workflow-level examples become durable reference points that help pilot participants work confidently today and inform standardized AI usage guidance across the organization tomorrow.

