Pilot Setup

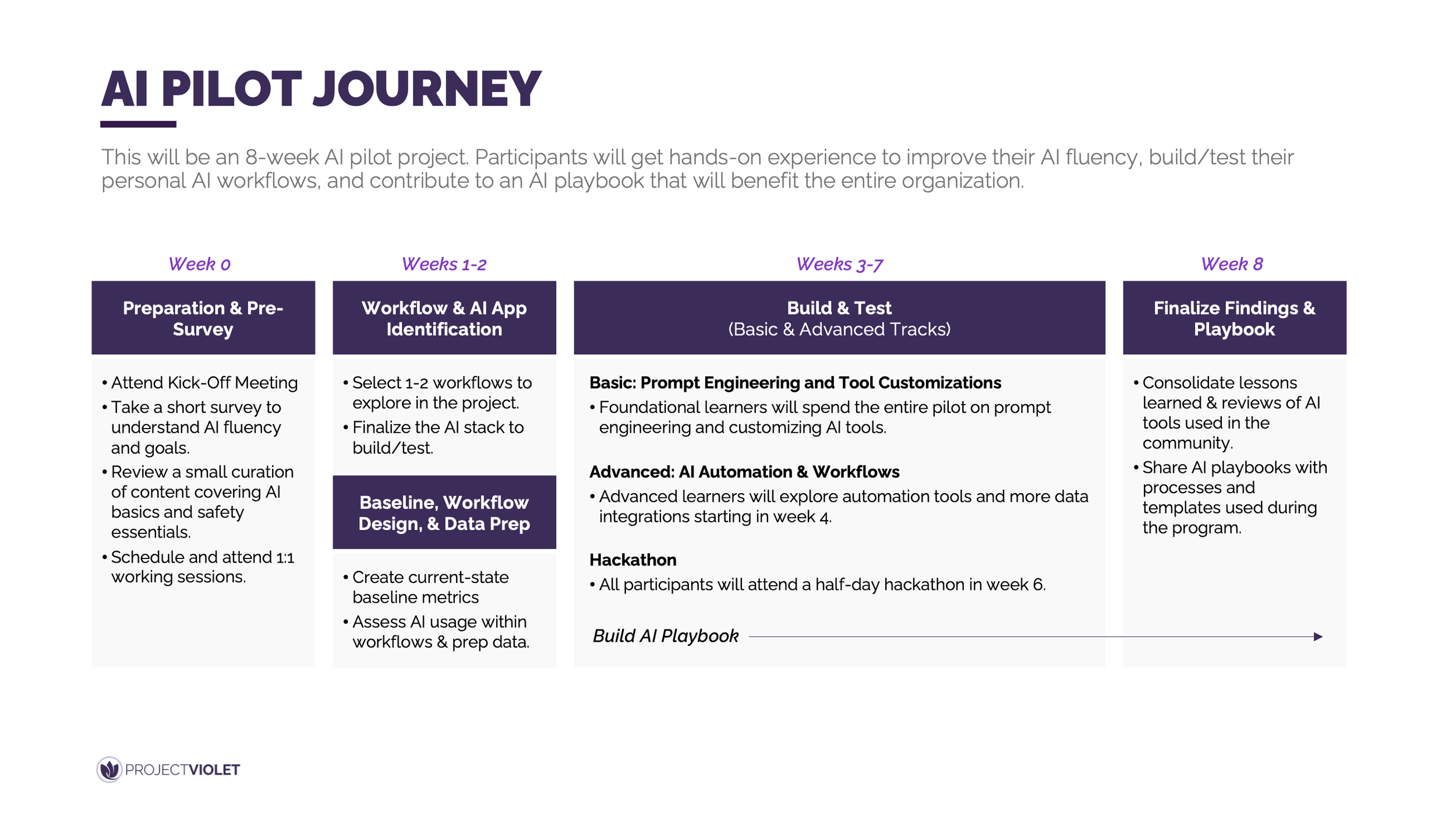

This eight-week AI pilot is designed to build real AI fluency through hands-on experimentation. Participants improve their skills, test personal workflows, and contribute directly to an organizational AI playbook.

Rather than treating AI as abstract training, the pilot is structured as a working journey from preparation to production-ready insights. It begins with baseline assessments and foundational content to establish shared language, expectations, and safety norms. Teams then identify priority workflows, assess current-state performance, and prepare data so experimentation is grounded in real work. The core of the program focuses on building and testing AI solutions across both foundational and advanced tracks, reinforced by a mid-pilot hackathon that accelerates learning through collaboration and pressure-tested use cases.

In practice, this approach ensures participants leave with more than individual skills. They produce validated workflows, documented lessons, and reusable templates that feed directly into a broader AI playbook. This creates a clear handoff from pilot learning to enterprise enablement, setting the stage for scaled adoption and more formal governance in the next phase.

Pre-Pilot Baseline & Readiness Assessment

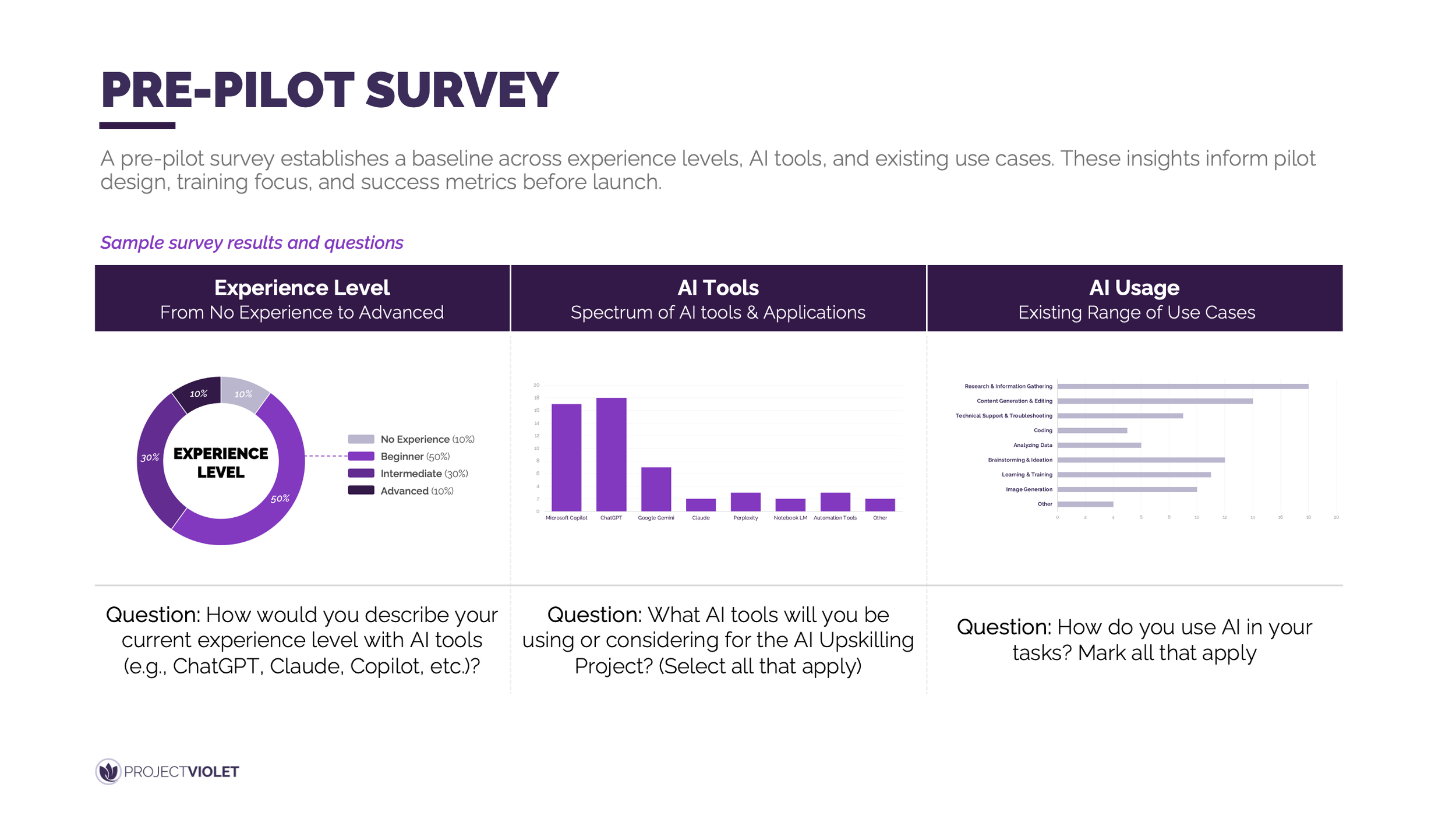

A pre-pilot survey establishes a clear baseline across experience levels, AI tools, and existing use cases. These insights shape pilot design, training focus, and success measures before launch.

By grounding the pilot in real data, organizations avoid generic training and instead tailor the journey to how teams are actually using AI today. The survey captures the full spectrum of AI maturity, from first-time users to advanced practitioners, and highlights which tools are already in circulation across the organization. It also surfaces where AI is being applied in daily work, such as research, content creation, analysis, or technical tasks, revealing both concentration areas and gaps. This baseline enables more intentional cohort design, clearer segmentation between foundational and advanced tracks, and more realistic outcome expectations.

In practice, the pre-pilot survey becomes the reference point for measuring progress. It informs which workflows to prioritize, where to invest deeper coaching, and how to define meaningful improvements by the end of the pilot. This sets up the next phase of the journey, where teams move from assessment into focused workflow and use case identification.

Sample Survey Questions:

1. How would you describe your current level of experience using AI tools (e.g., ChatGPT, Copilot, Claude)?
   (No experience, Beginner, Intermediate, Advanced)

2. Which AI tools are you currently using or planning to use as part of this pilot?
   (Select all that apply)

3. How frequently do you use AI tools in your day-to-day work today?
   (Never, Occasionally, Weekly, Daily)

4. For which types of tasks do you currently use AI?
   (Select all that apply, e.g., research, content generation, data analysis, coding, learning and training)

5. What are you hoping to improve or achieve by participating in this AI pilot?
   (Open-ended)
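
To turn survey answers into a baseline, responses can be tallied by experience level, tool, and task type. The following is a minimal sketch, not a prescribed method: the `SurveyResponse` fields, the `summarize_baseline` and `suggest_track` helpers, and the rule that Intermediate and Advanced respondents go to the advanced track are all assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List

# Answer options taken from the sample experience question.
EXPERIENCE_LEVELS = ["No experience", "Beginner", "Intermediate", "Advanced"]


@dataclass
class SurveyResponse:
    """One participant's answers (hypothetical structure)."""
    experience: str
    tools: List[str] = field(default_factory=list)
    frequency: str = "Never"
    tasks: List[str] = field(default_factory=list)
    goal: str = ""


def summarize_baseline(responses: List[SurveyResponse]) -> Dict[str, Dict[str, int]]:
    """Count respondents per experience level, tool, and task type."""
    experience = Counter(r.experience for r in responses)
    tools = Counter(tool for r in responses for tool in r.tools)
    tasks = Counter(task for r in responses for task in r.tasks)
    return {
        # Report every level, including those with zero respondents.
        "experience": {level: experience.get(level, 0) for level in EXPERIENCE_LEVELS},
        "tools": dict(tools.most_common()),
        "tasks": dict(tasks.most_common()),
    }


def suggest_track(response: SurveyResponse) -> str:
    """Assumed cutoff: Intermediate and above go to the advanced track."""
    if response.experience in ("Intermediate", "Advanced"):
        return "advanced"
    return "foundational"


if __name__ == "__main__":
    sample = [
        SurveyResponse("Beginner", ["ChatGPT"], "Weekly", ["research"]),
        SurveyResponse("Advanced", ["ChatGPT", "Claude"], "Daily", ["coding", "research"]),
    ]
    print(summarize_baseline(sample))
    print([suggest_track(r) for r in sample])
```

Running the same summary on an end-of-pilot survey would give a like-for-like comparison against this baseline. The track cutoff is a placeholder that each organization would set for itself.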

Pilot AI Stack & Guardrails

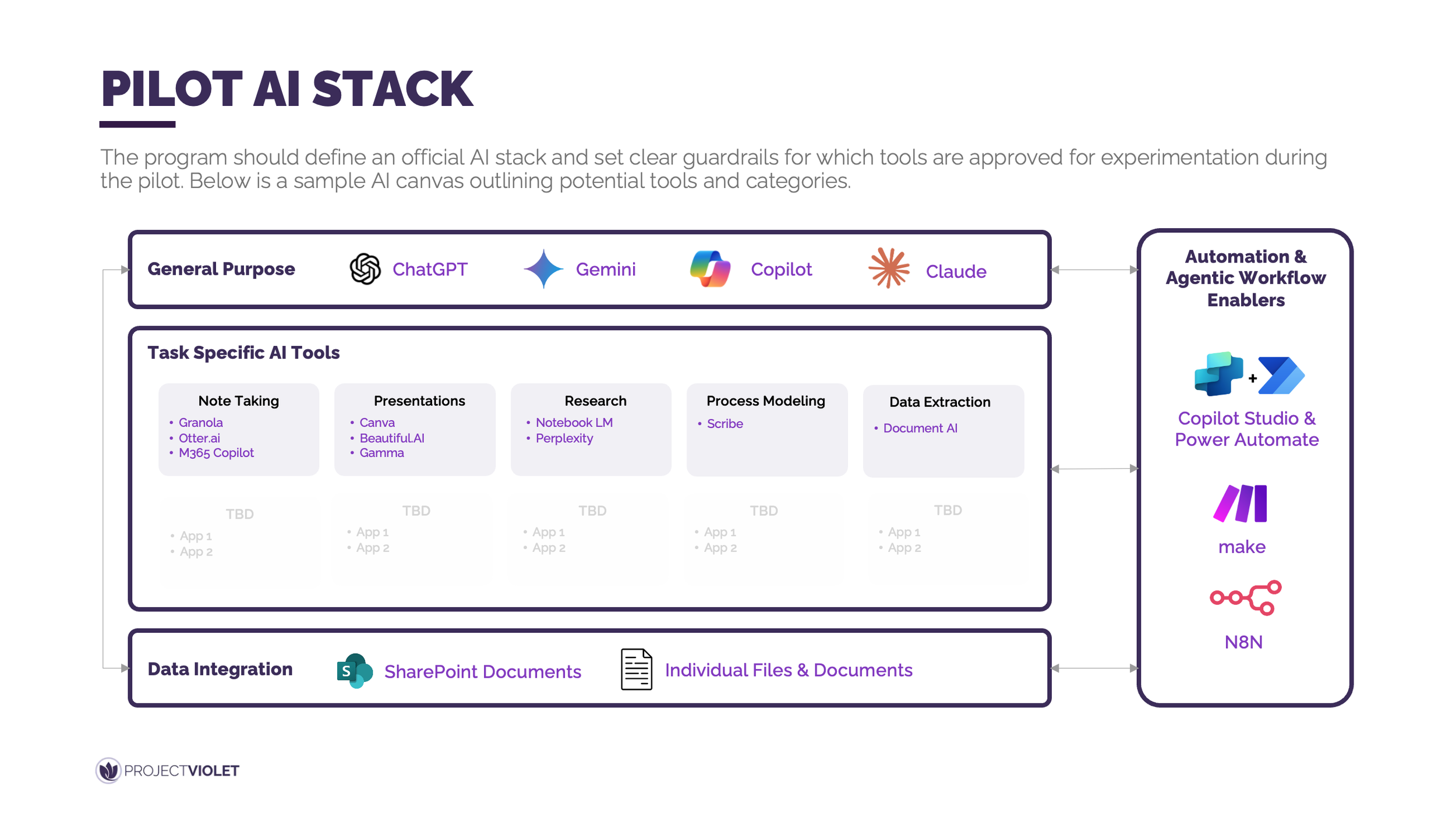

The pilot defines an official AI stack and establishes clear guardrails for which tools are approved for experimentation. This ensures participants can move quickly while operating within shared standards for safety, consistency, and compliance.

A defined pilot stack reduces friction by giving teams clarity on where to experiment and confidence that their work aligns with organizational expectations. Rather than allowing ad hoc tool sprawl, the stack intentionally combines general-purpose AI, task-specific tools, data sources, and automation enablers into a coherent canvas. This structure encourages practical exploration across common workflows such as research, content creation, process modeling, and analysis, while limiting risk by constraining data access and tool usage. Just as importantly, it creates comparability across participants, making lessons learned easier to synthesize and scale.

In practice, the pilot stack serves as both a learning boundary and a design accelerator. Participants spend less time deciding which tools to try and more time testing meaningful use cases within approved categories. The outputs from this experimentation feed directly into stack recommendations, usage guidelines, and future governance decisions, setting up the transition from pilot experimentation to repeatable, enterprise-ready patterns in the next phase.
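
One way to make the guardrails concrete is to record the approved stack as an allowlist and check each proposed experiment against it. The sketch below is illustrative only. The four category names follow the stack description above, but every tool name, the permitted data classes, and the `Experiment` and `check_guardrails` names are hypothetical placeholders, not part of the pilot as described.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical allowlist: categories mirror the stack description,
# tool names are placeholders rather than real product recommendations.
APPROVED_STACK = {
    "general_purpose_ai": {"assistant-a"},
    "task_specific": {"diagram-tool-b"},
    "data_sources": {"shared-drive-public"},
    "automation": {"workflow-runner-c"},
}

# Assumed data classes that participants may use during the pilot.
ALLOWED_DATA_CLASSES = {"public", "internal"}


@dataclass(frozen=True)
class Experiment:
    """A proposed pilot experiment (hypothetical structure)."""
    name: str
    tools: Tuple[str, ...]
    data_class: str


def check_guardrails(experiment: Experiment) -> List[str]:
    """Return a list of guardrail problems; an empty list means approved."""
    approved_tools = set().union(*APPROVED_STACK.values())
    problems = [
        f"tool not in pilot stack: {tool}"
        for tool in experiment.tools
        if tool not in approved_tools
    ]
    if experiment.data_class not in ALLOWED_DATA_CLASSES:
        problems.append(f"data class not allowed: {experiment.data_class}")
    return problems


if __name__ == "__main__":
    proposal = Experiment("summarize meeting notes", ("assistant-a",), "internal")
    print(check_guardrails(proposal))
```

Keeping the allowlist as plain data means the stack recommendations that come out of the pilot can be applied by editing one structure rather than rewriting the check.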

