Core Team Design

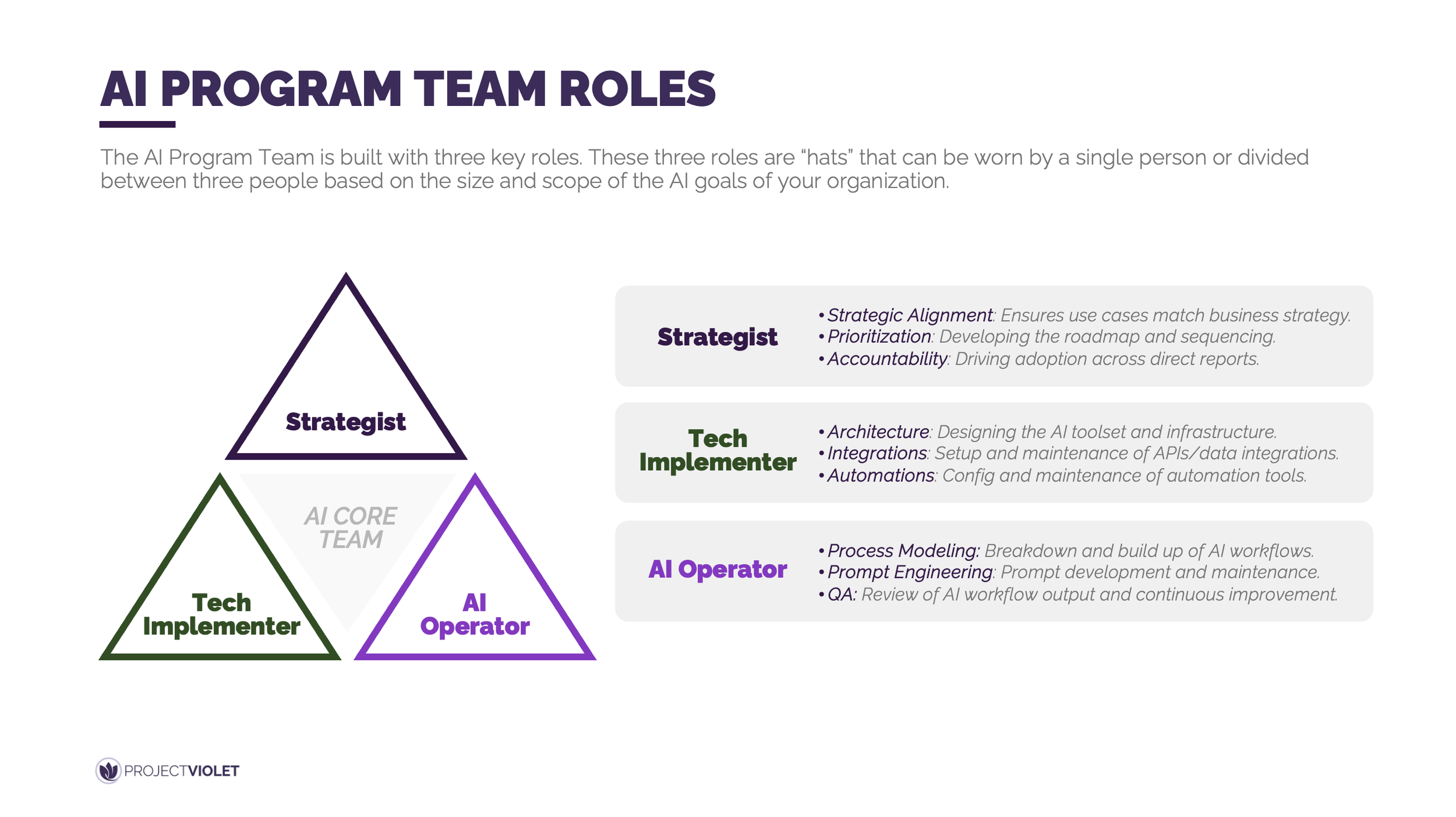

The AI Program Team is built around three core roles that act as complementary hats rather than rigid job titles. These roles can be combined or separated based on the scale, maturity, and ambition of an organization’s AI efforts.

High-performing AI programs work because strategic intent, technical enablement, and operational execution are clearly owned and deliberately connected. The strategist sets direction by aligning AI initiatives to business priorities, sequencing the roadmap, and driving accountability for adoption. The tech implementer focuses on architecture, integrations, and automation reliability to ensure solutions can scale. The AI operator brings these efforts to life by designing workflows, maintaining prompts, and continuously improving quality through hands-on execution and review.

In practice, this role clarity prevents common breakdowns such as disconnected experimentation or over-engineered solutions with low adoption. Smaller teams may consolidate responsibilities early on, while larger organizations benefit from sharper ownership as complexity increases. This structure provides the foundation for determining how the team should be deployed across the organization.

Scaling the AI Program Across the Organization

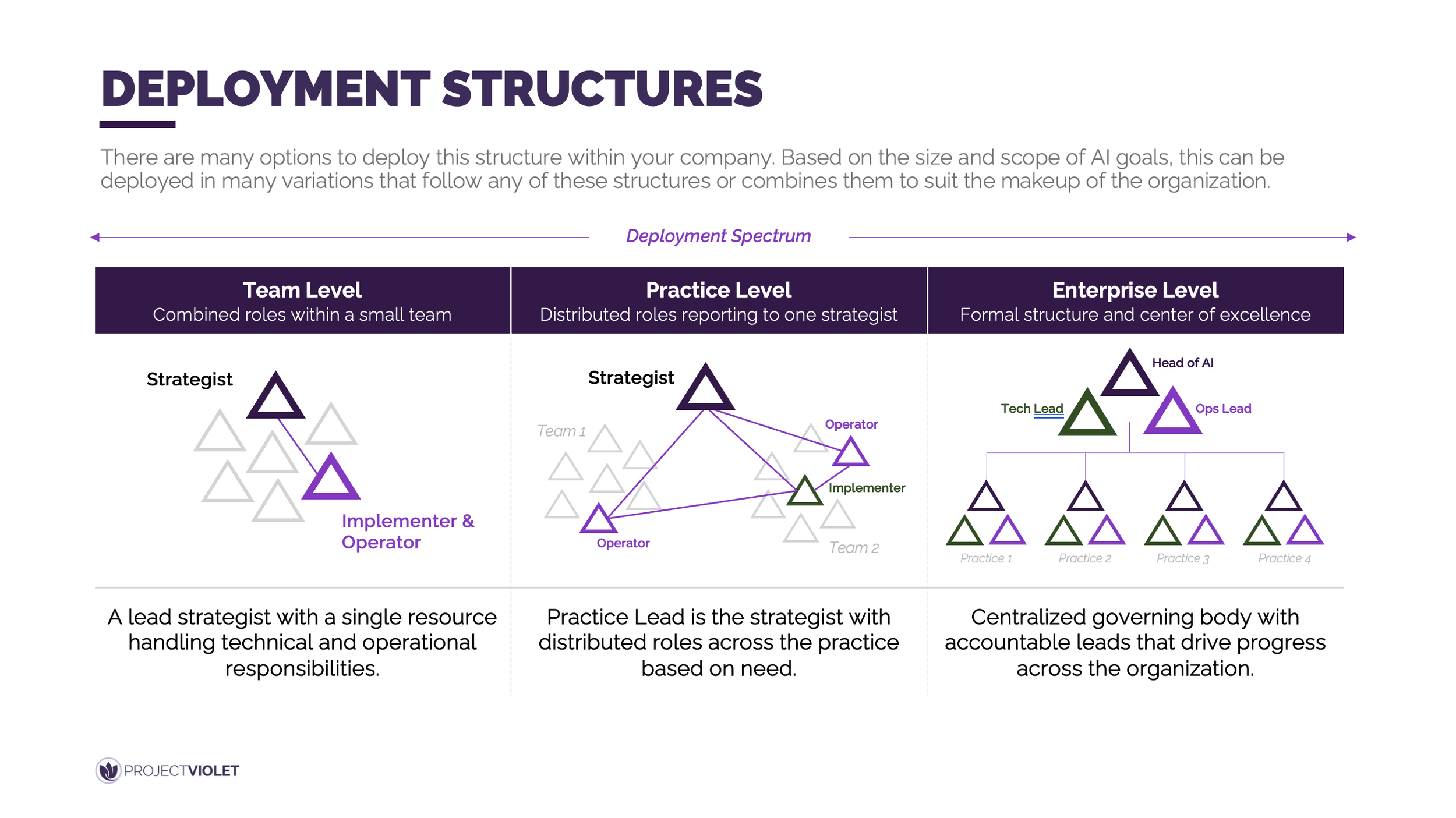

AI program structures can be deployed in several ways, depending on organizational size, operating model, and desired pace of adoption. These approaches exist along a spectrum, from lean team-level setups to fully centralized enterprise models.

The right deployment model aligns decision-making authority and accountability with the scale of AI impact being pursued. At the team level, roles are often combined to maximize speed and focus, with a single strategist supported by hands-on execution. At the practice level, responsibilities are distributed across teams but coordinated through a central strategic lead. At the enterprise level, a formal governance structure and center of excellence emerge, with dedicated leaders driving consistency, standards, and progress across practices.

In reality, most organizations move along this spectrum over time rather than selecting a single end state up front. Early wins in smaller deployments create confidence and momentum for broader rollout. This evolution naturally introduces the need for clearer governance, shared standards, and scalable operating models.

Sustained Adoption with the AI Operator

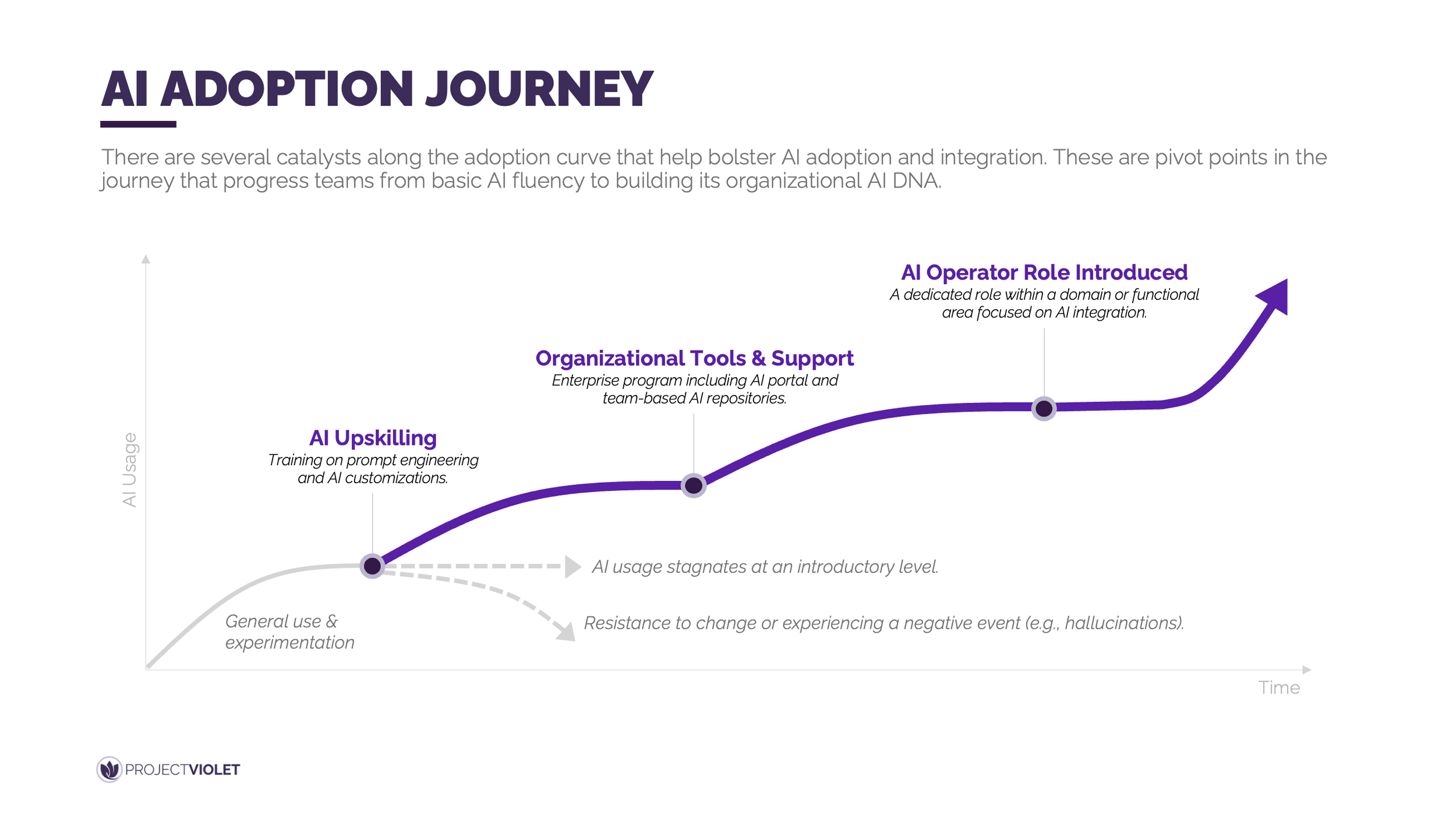

AI adoption typically begins with general experimentation and early productivity gains, followed by a plateau where usage stalls at a basic level. Moving beyond this point requires intentional catalysts that shift AI from a personal tool to an organizational capability.

The introduction of a dedicated AI Operator role is the inflection point where AI adoption accelerates from sporadic use to sustained, compounding impact. The AI Operator is responsible for translating business needs into repeatable workflows, maintaining prompts and configurations, and ensuring output quality through continuous iteration. Unlike early upskilling efforts that focus on individual capability, this role creates shared assets, reduces friction, and embeds AI directly into how work gets done. When paired with organizational tools such as AI portals and shared repositories, the operator enables teams to build on each other’s progress rather than restarting from scratch.

In practice, adoption takes off when the AI Operator role is formally assigned and given sufficient time and mandate to operate. This can be a dedicated position or a clearly defined responsibility within an existing role, but it cannot be an afterthought. Establishing this role closes the gap between learning and execution and marks the transition from AI experimentation to a durable AI operating rhythm for the organization.

