Pilot Execution

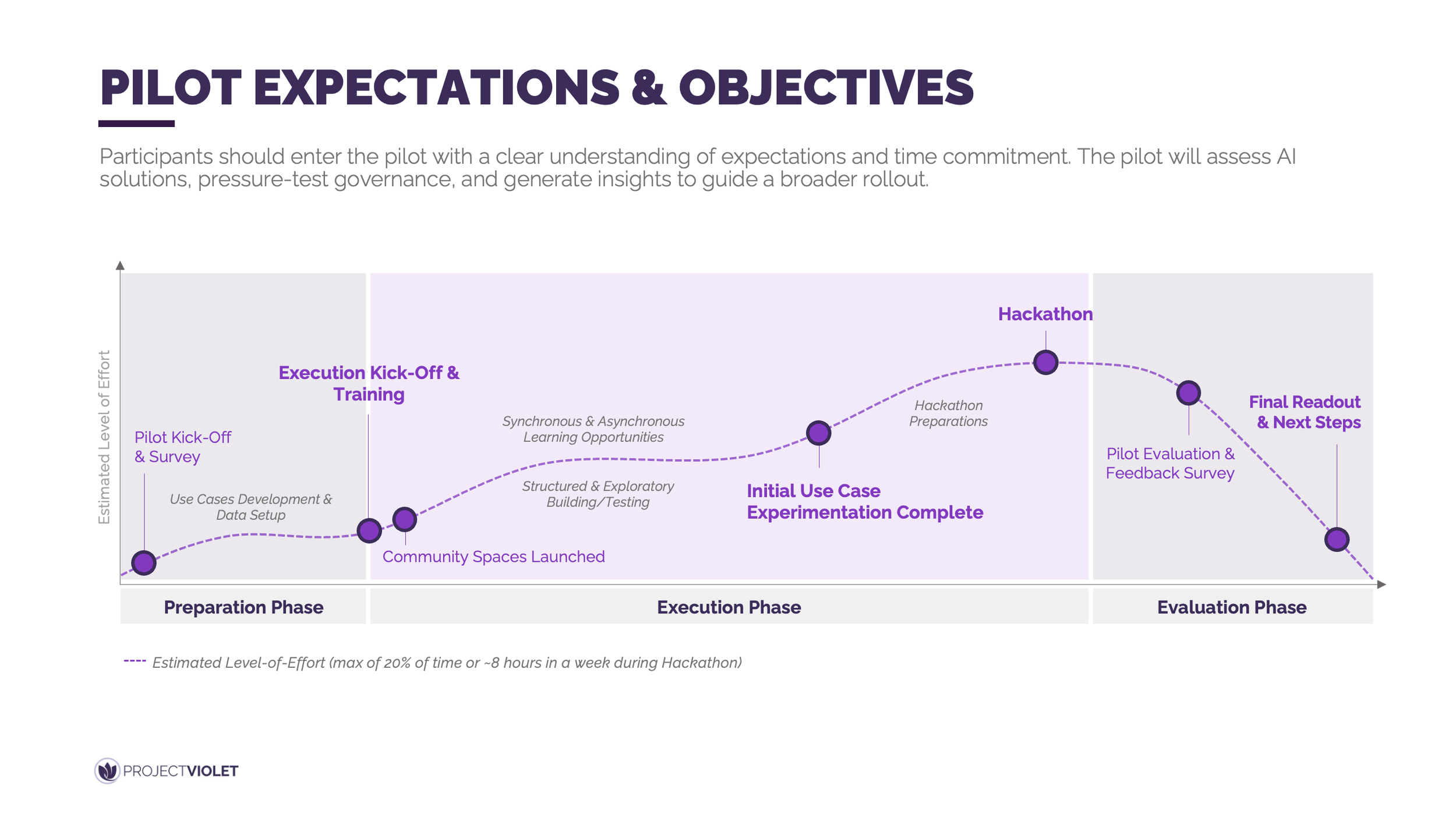

Participants should enter the pilot with a clear understanding of expectations, time commitment, and outcomes. The pilot is designed to assess AI solutions, pressure-test governance, and inform a broader enterprise rollout.

At its core, the pilot balances structured learning with hands-on experimentation to generate real signals about what works, what does not, and what needs refinement before scaling. Participants move through a defined preparation, execution, and evaluation journey that combines training, community engagement, and applied use case development. The effort level is intentionally bounded so teams can participate alongside their day-to-day responsibilities while still producing meaningful outputs. This clarity upfront helps align participants, managers, and sponsors around why the pilot exists and how success will be measured.

In practice, this means participants are not just learning tools but actively testing workflows, sharing lessons, and contributing feedback that shapes future decisions. Activities like use case experimentation, hackathons, and structured evaluations create shared artifacts and insights the organization can reuse. These outputs then become the foundation for decisions on governance, tooling, and the next phase of rollout.

Building a Culture of Shared Learning

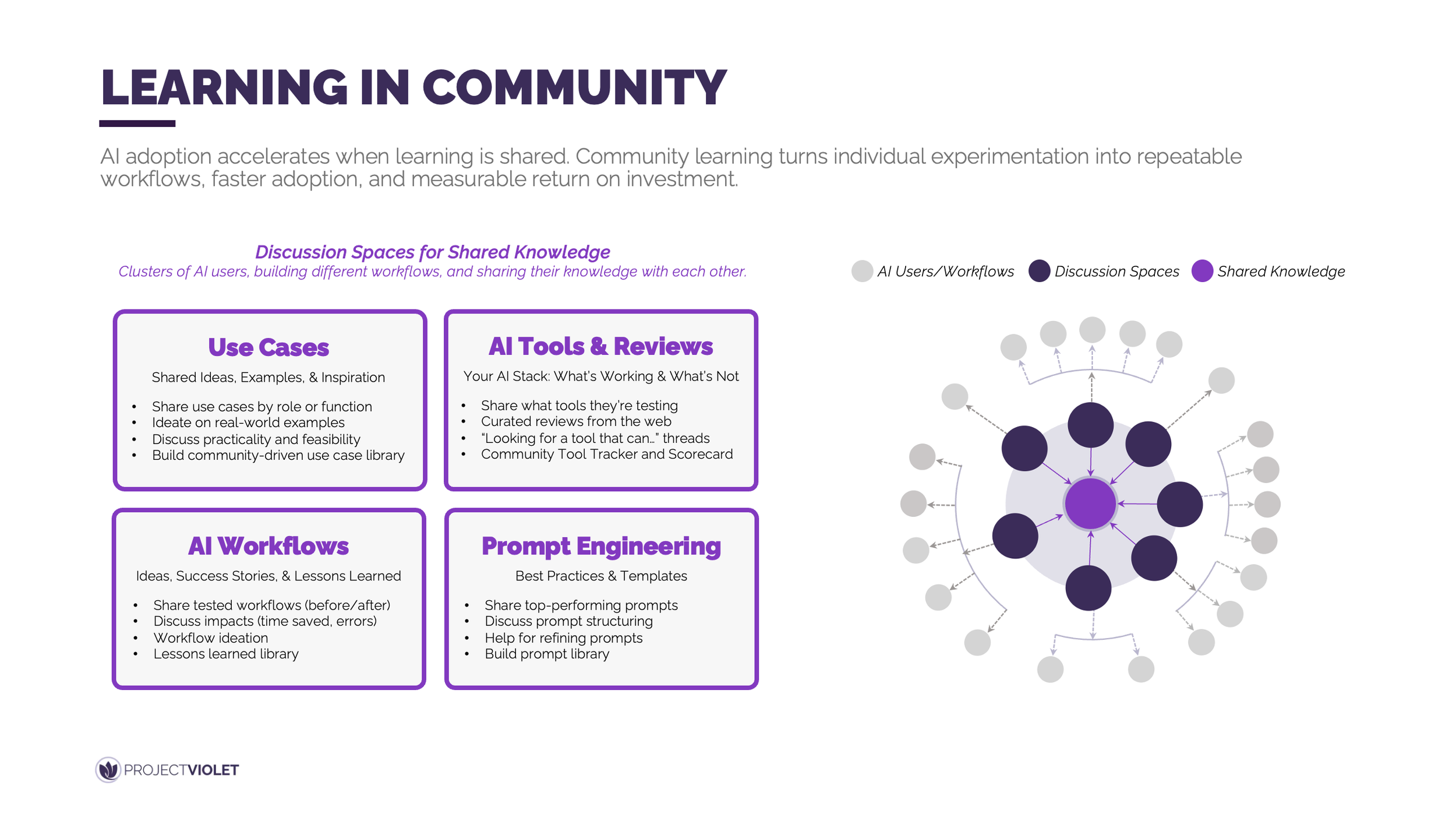

AI adoption accelerates when learning is shared rather than siloed. Establishing community spaces during the pilot sets the expectation that progress comes from contribution, transparency, and collective problem solving.

Community spaces are not a side feature of the pilot; they are the foundation for a culture of sharing that makes AI adoption scalable and sustainable. When these spaces are launched early, participants see that experiments, lessons learned, and even failures are meant to be visible and reusable. This shifts behavior away from hoarding knowledge and toward building shared assets such as prompts, workflows, and use cases. Over time, this shared body of knowledge becomes a force multiplier that shortens learning curves and improves outcomes across teams.

In practice, this requires intentional ownership from the core AI team, not passive moderation. The team must actively contribute, highlight strong examples, and model the behaviors they want to see. It also means selecting pilot participants who are naturally inclined toward collaborative learning and peer sharing. These early contributors become the nucleus of the future community, creating momentum that carries forward into the broader enterprise rollout.

Assessment Frameworks for AI Pilots

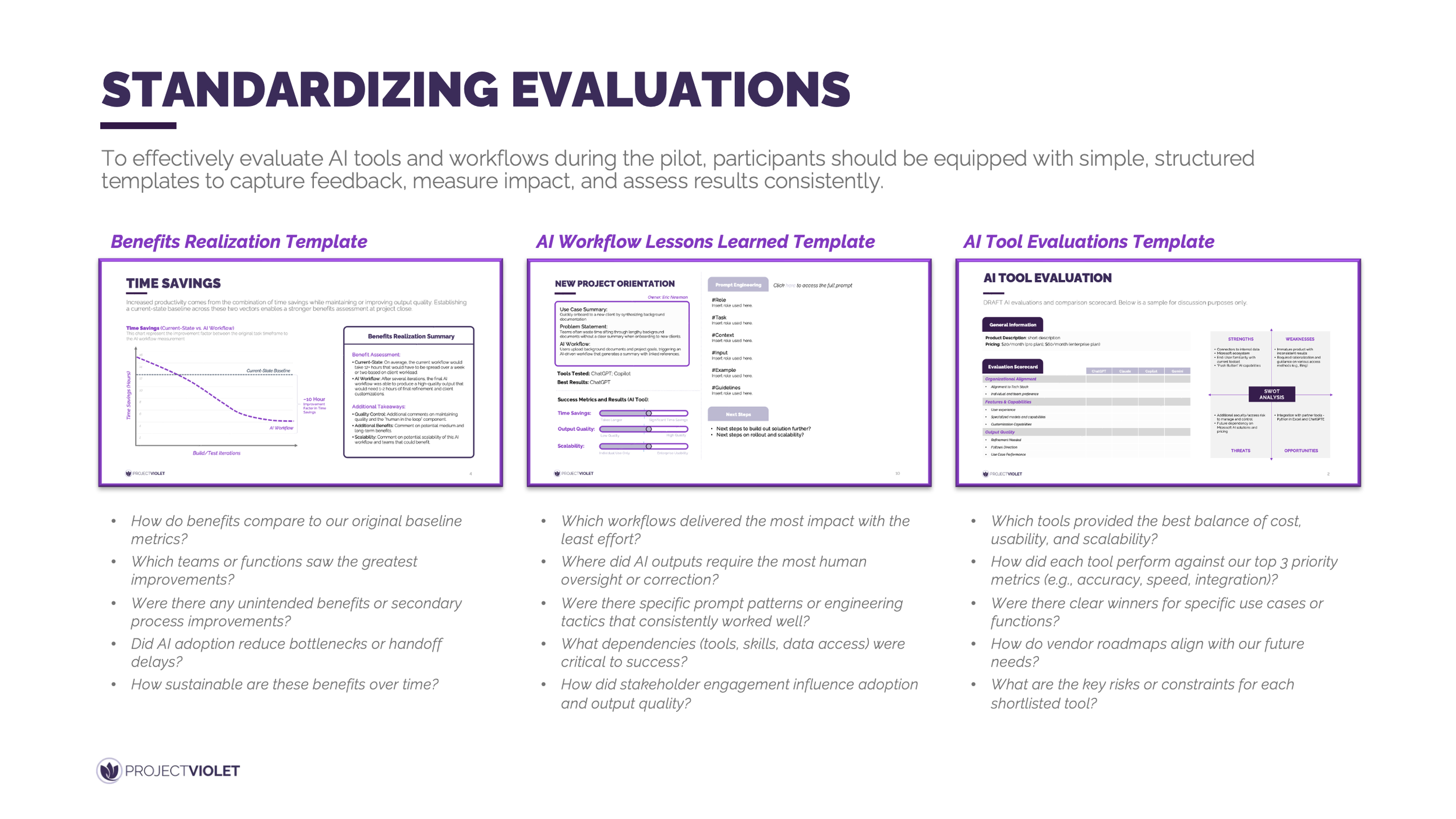

To effectively evaluate AI tools and workflows during the pilot, participants need simple, structured ways to capture feedback and assess outcomes. Providing common templates ensures insights are comparable and actionable across teams.

Standardized evaluation templates create a shared language for measuring impact, validating tools, and pressure-testing governance decisions before scaling. Without this structure, feedback becomes anecdotal and difficult to aggregate, limiting its value for leadership decision-making. Consistent templates help participants assess time savings, output quality, scalability, and risk using the same criteria. This makes it possible to identify real patterns across tools and workflows rather than isolated success stories.

In practice, these templates guide participants to reflect on what worked, what required heavy human oversight, and where guardrails were effective or insufficient. They also enable the core team to synthesize results into clear recommendations for future AI stacks and operating models. By the end of the pilot, these assessments form a credible evidence base to inform broader rollout decisions and governance design.
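One way to picture such a template is as a small structured record that every team fills in the same way. The sketch below is illustrative only: the four scored criteria come from the text above, but the 1-5 scale, the field names, the `Assessment` class, and the `summarize_by_tool` helper are all assumptions rather than a prescribed format.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

# Criteria named in the text. The 1-5 scale is an assumption for this sketch;
# for "risk", a higher score is assumed to mean higher perceived risk.
CRITERIA = ("time_savings", "output_quality", "scalability", "risk")


@dataclass
class Assessment:
    """One team's evaluation of one tool or workflow (hypothetical template)."""
    team: str
    tool: str
    time_savings: int
    output_quality: int
    scalability: int
    risk: int
    notes: str = ""  # free text: what worked, where oversight was needed

    def __post_init__(self):
        # Reject scores outside the assumed 1-5 range so results stay comparable.
        for name in CRITERIA:
            score = getattr(self, name)
            if not 1 <= score <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {score}")


def summarize_by_tool(assessments):
    """Average each criterion per tool across all submitted assessments."""
    grouped = defaultdict(list)
    for item in assessments:
        grouped[item.tool].append(item)
    return {
        tool: {name: mean(getattr(i, name) for i in items) for name in CRITERIA}
        for tool, items in grouped.items()
    }


if __name__ == "__main__":
    # Placeholder entries, not real pilot data.
    submitted = [
        Assessment("Team 1", "Tool A", 4, 3, 2, 2, notes="Needed heavy review"),
        Assessment("Team 2", "Tool A", 2, 5, 4, 2),
        Assessment("Team 2", "Tool B", 5, 5, 5, 1),
    ]
    for tool, scores in summarize_by_tool(submitted).items():
        print(tool, scores)
```

Because every record uses the same fields and scale, the core team can aggregate across teams instead of comparing free-form anecdotes. The free-text `notes` field leaves room for the qualitative reflections on oversight and guardrails.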

